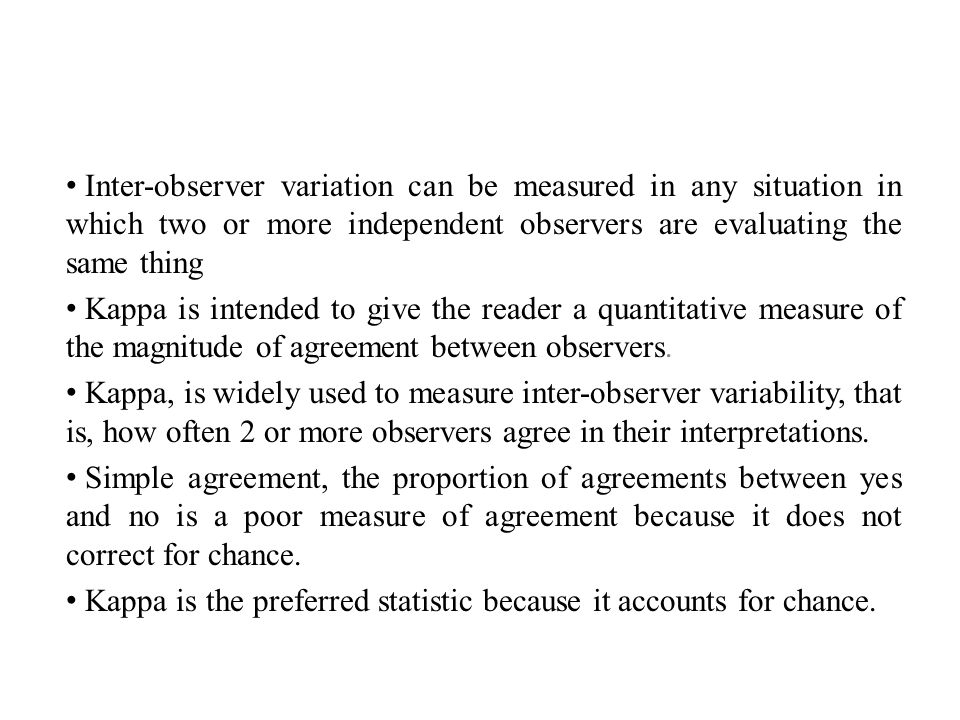

Inter-observer variation can be measured in any situation in which two or more independent observers are evaluating the same thing. Kappa is intended to. - ppt download

Inter-observer variation in the interpretation of chest radiographs for pneumonia in community-acquired lower respiratory tract infections - Clinical Radiology

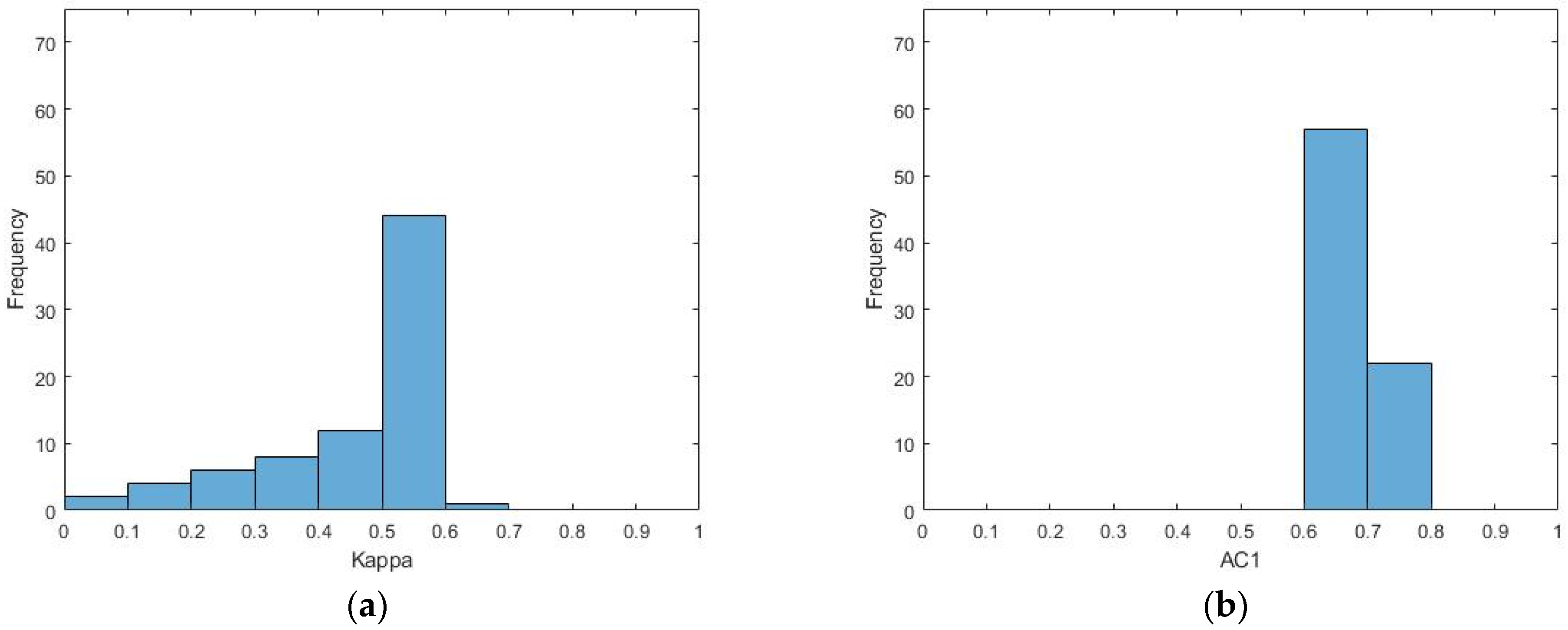

Symmetry | Free Full-Text | An Empirical Comparative Assessment of Inter-Rater Agreement of Binary Outcomes and Multiple Raters | HTML

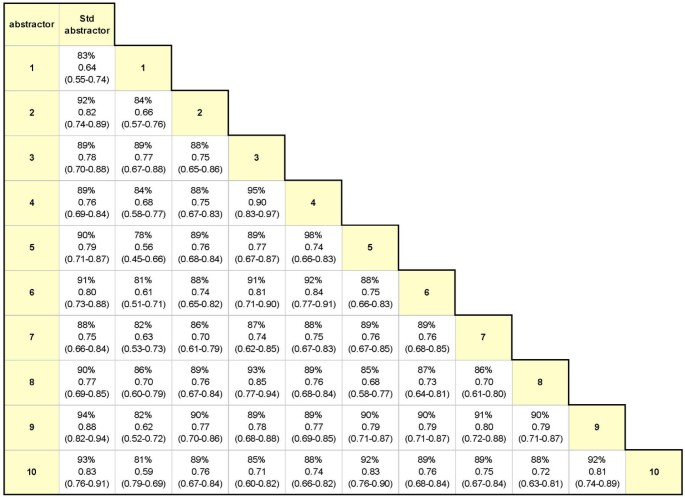

Examining intra-rater and inter-rater response agreement: A medical chart abstraction study of a community-based asthma care program | BMC Medical Research Methodology | Full Text

Inter-observer agreement and reliability assessment for observational studies of clinical work - ScienceDirect

Intra- and inter-rater reproducibility of ultrasound imaging of patellar and quadriceps tendons in critically ill patients | PLOS ONE

Inter-observer and intra-observer agreement in drug-induced sedation endoscopy — a systematic approach | The Egyptian Journal of Otolaryngology | Full Text

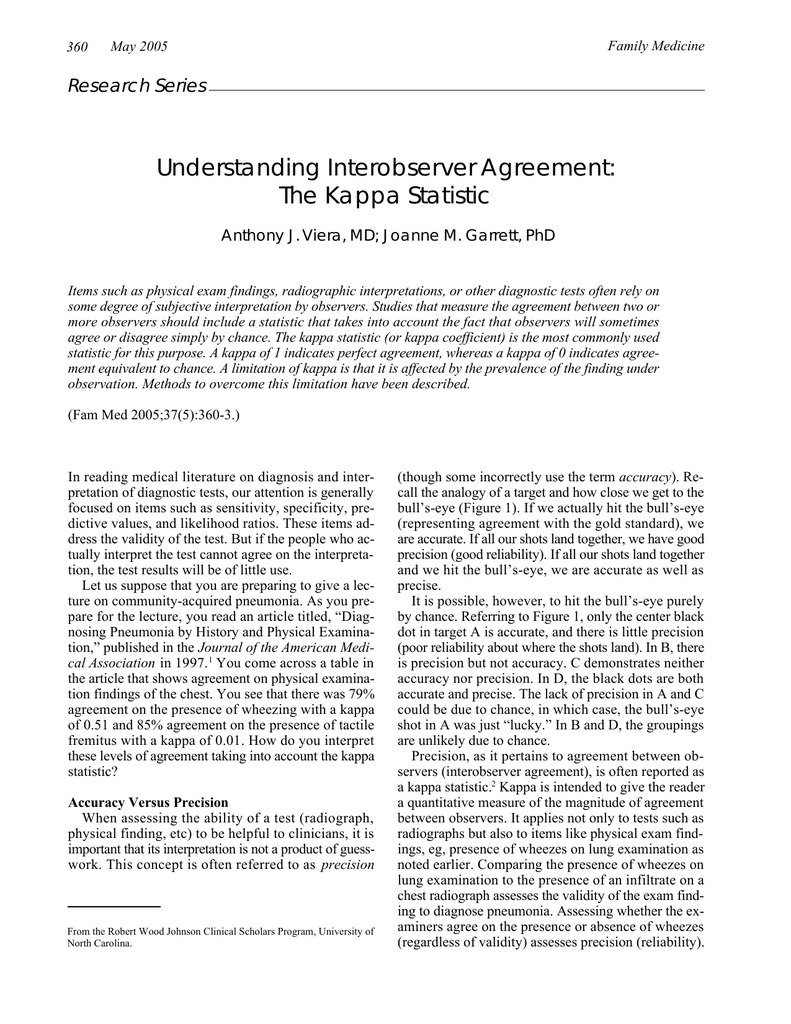

![\[PDF\] Understanding interobserver agreement: the kappa statistic. | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/e45dcfc0a65096bdc5b19d00e4243df089b19579/3-Table2-1.png)

![\[PDF\] Understanding interobserver agreement: the kappa statistic. | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/e45dcfc0a65096bdc5b19d00e4243df089b19579/3-Table3-1.png)